Visual Search Difficulty Data Set

by Radu Tudor Ionescu, Bogdan Alexe, Marius Leordeanu, Marius Popescu, Dim Papadopoulos, Vittorio Ferrari

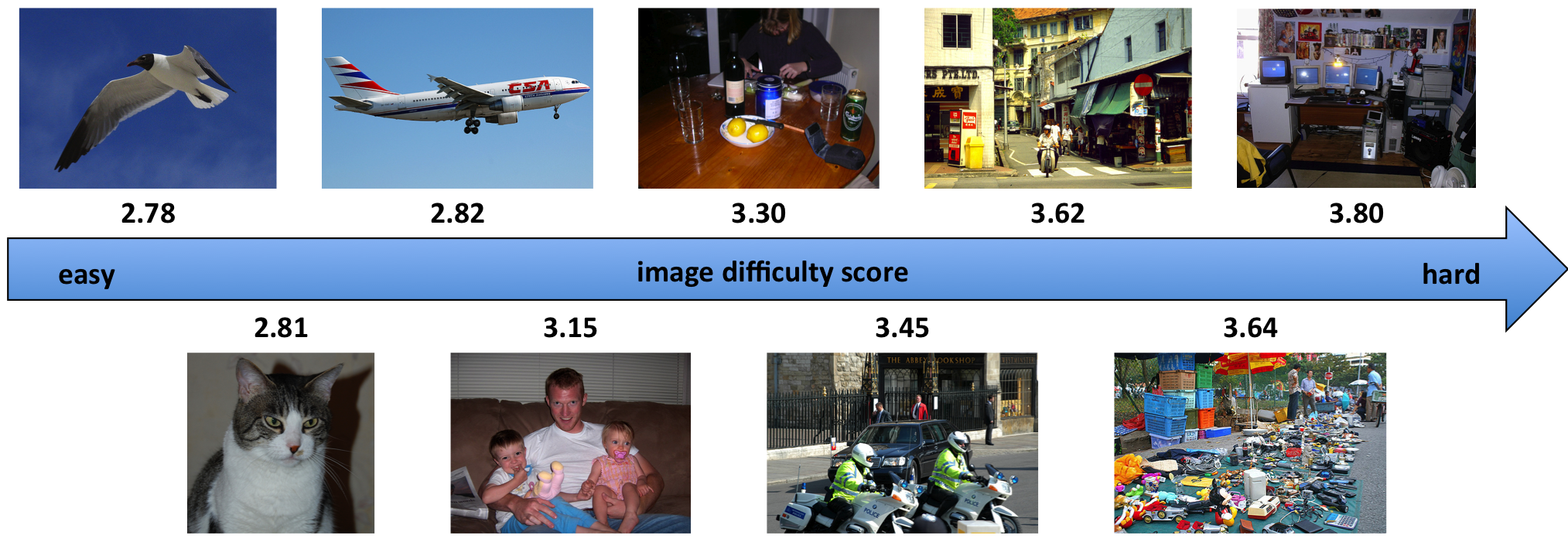

In [1], we address the problem of estimating image difficulty defined as the human response time for solving a visual search task. We collected human annotations of image difficulty (available here) for the Pascal VOC 2012 data set through CrowdFlower. We then analyzed what human interpretable image properties can have an impact on visual search difficulty, and how accurate are those properties for predicting difficulty. Next, we developed a regression model based on deep features learned with state of the art convolutional neural networks and show better results for predicting the ground-truth visual search difficulty scores produced by human annotators. The following figure shows a few images with difficulty scores predicted by our system in increasing order of their difficulty.

Data Set

The Visual Search Difficulty 1.0 data set contains difficulty scores collected for the Pascal VOC 2012. The data used in [1] was collected through CrowdFlower. The Visual Search Difficulty 1.0 data set is available for download and use under the GNU Public License.

Difficulty Prediction Results

Our model is able to correctly rank about 75% image pairs in the same order as given by their ground-truth difficulty scores. We show that our difficulty predictor generalizes well across new classes. Finally, we demonstrate that our predicted difficulty scores are useful in the context of weakly supervised object localization (8% improvement) and semi-supervised object classification (1% improvement).

Open Source Code

The Visual Search Difficulty Prediction Model 1.0 contains code to build a regression model for predicting ground-truth visual search difficulty scores. The provided model is based on deep features and Support Vector Regression. The code is available for download and use under the GNU Public License.

Human Agreement

To compute the agreement among humans, we considered 58 trusted annotators who annotated the same set of 56 images. We compute the Kendall's tau correlation following a one-versus-all scheme, comparing the response time of an annotator with the mean response time of all annotators. We obtained the mean value of 0.562 means that the average human ranks about 80% image pairs in the same order as given by the mean response time of all annotators. This high level of agreement among humans demonstrates that visual search difficulty can indeed be consistently measured. The average human performance is above the performance of our difficulty predictor (0.434) when it is evaluated on the same images using an identical testing protocol.

Citation

If you use our data set or code in any scientific work, please cite the corresponding work:

[1] Radu Tudor Ionescu, Bogdan Alexe, Marius Leordeanu, Marius Popescu, Dimitrios Papadopoulos, Vittorio Ferrari. How hard can it be? Estimating the difficulty of visual search in an image. Proceedings of CVPR, pp. 2157–2166, 2016. [BibTeX]

More Details

Read the paper or take a quick look at the poster for more details.

Acknowledgments

Vittorio Ferrari was supported by the ERC Starting Grant VisCul. Radu Tudor Ionescu was supported by the POSDRU/159/1.5/S/137750 Grant. Marius Leordeanu was supported by project number PNII PCE-2012-4-0581.